Description

This is a lower-division undergraduate philosophy course intended for both philosophy majors and nonmajors. It discusses philosophical issues broadly related to artificial intelligence (AI) and machine learning (ML). The course begins with an introduction to the historical and philosophical development of the idea of AI. The second part of the course introduces some contemporary AI/ML systems and discusses the epistemological issues that they raise. The final part of the course discusses the interaction between AI/ML and human society, including some ethical issues relevant to AI/ML. Throughout the course, participants will be introduced to some monumental ideas in mathematics, philosophy, and computer science.

Outline

Unit 1: Philosophy of AI

This unit introduces some central topics of this class: what is AI? What is a computer? Can computer programs possibly display intelligence? What is the measure of intelligence? How can we build such programs?

We start with Turing’s seminal paper, where these questions are first proposed and discussed. We then introduce the first research program that aimed to implement AI on computers, logicist AI, and Dreyfus’s criticism of this program. The conceptual and practical difficulties faced by logicist AI lead us to wonder whether there can be AI systems that are not based on logical rules given a priori, but instead learn from experience. This thought leads to the fundamental philosophical dichotomy between empiricism and rationalism. We then introduce two problems that an empiricist AI system faces, both philosophically and practically: Hume’s problem of induction and Goodman’s new riddle of induction.

We also introduce some perspectives from mathematicians and computer scientists. In light of the Church–Turing thesis and the undecidability of the halting problem, are there mathematical limitations on the power of AI? Are insights from computational complexity theory and formal learning theory helpful for us to understand the nature of intelligence and learning from experience?

Detailed reading list

| Reading | Author | Notes |
| --- | --- | --- |
| “Computing Machinery and Intelligence”, 1950 | Turing | |
| What Computers Still Can’t Do, 1992 | Dreyfus | The class discusses the concluding chapter of the book. The introduction to the second edition contains an interesting discussion of the then emerging statistical approach to AI. |
| An Enquiry Concerning Human Understanding, 1748 | Hume | |
| “The New Riddle of Induction”, 1954 | Goodman | |
| “On the Question of Whether the Mind Can be Mechanized”, 2018 | Koellner | |
| “Why Philosophers Should Care About Computational Complexity Theory”, 2011 | Aaronson | The class discusses the sections related to the Turing test and the problem of induction. |

Unit 2: Philosophy of Machine Learning

This unit begins with a nontechnical introduction to machine learning algorithms and their applications. We then discuss three philosophical topics: a) whether language models can understand language; b) the contrast between symbolic/logicist AI and statistical AI; c) the interpretability of statistical AI.

Detailed reading list

| Reading | Author | Notes |
| --- | --- | --- |
| “Vector Semantics and Embeddings”, in Speech and Language Processing, 2024 | Jurafsky and Martin | |
| “Deep Learning”, 2015 | LeCun, Bengio and Hinton | |
| “A Philosophical Introduction to Large Language Models”, 2024 | Millière and Buckner | |
| “Building Machines that Learn and Think Like People”, 2017 | Lake et al. | |
| “The Next Decade in AI: Four Steps Towards Robust Artificial Intelligence”, 2020 | Marcus | |
| “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?”, 2021 | Bender et al. | |
| The Alignment Problem: Machine Learning and Human Values, 2020 | Christian | We use Chapter 3 “Transparency” to introduce the issue of AI interpretability. |
| “The Right to Explanation”, 2021 | Vredenburgh | |
| “Transparency is Surveillance”, 2021 | Nguyen | |

Unit 3: AI Ethics

In this unit we discuss two ethical topics related to AI: a) the manifestation of biases in machine-learning-based decision-making systems; b) whether the possibility of a “singularity”, or an AI system with superhuman intelligence, should be a major ethical concern.

Detailed reading list

| Reading | Author | Notes |

| “Machine Bias”, 2016 | ProPublica | |

| “Race and Gender” in The Oxford Handbook of Ethics of AI, 2020 | Gebru | |

| “Inherent Trade-Offs in the Fair Determination of Risk Scores”, 2016 | Kleinberg et al. | |

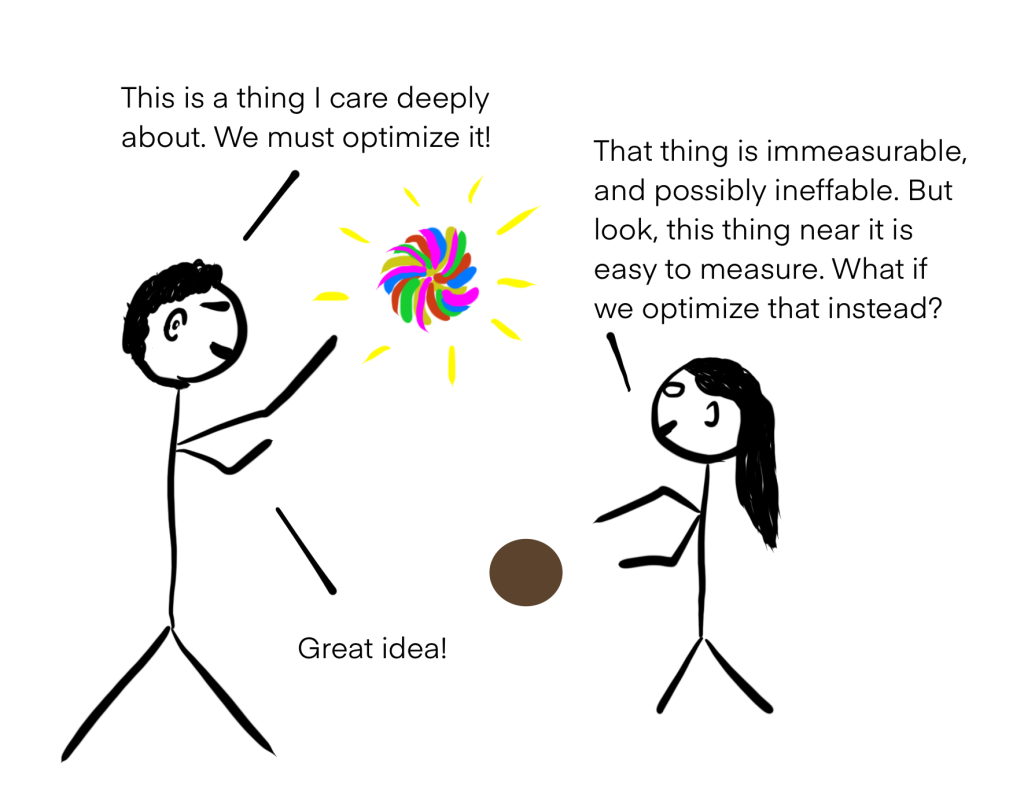

| “Against Predictive Optimization”, 2023 | Wang et al. | |

| “The Singularity: A Philosophical Analysis”, 2010 | Chalmers | |

| “Against the Singularity Hypothesis”, 2024 | Thorsdad |

Acknowledgements

- Thanks to Andy Yang and Ced Zhang who agreed to give guest lectures in this iteration of the course!

- Thanks to Ced Zhang, Boyuan Chen, and Yixiu Zhao who gave valuable advice in designing this course.