Welcome to the University of Notre Dame Robotics and Autonomy Research (ROAR) Lab! Our lab focuses on developing practical, computationally efficient, and provably correct algorithms that prepare autonomous systems to be cognizant, taskable, and adaptive, and to collaborate with humans in complex, ever-changing, and unknown environments.

Our Research

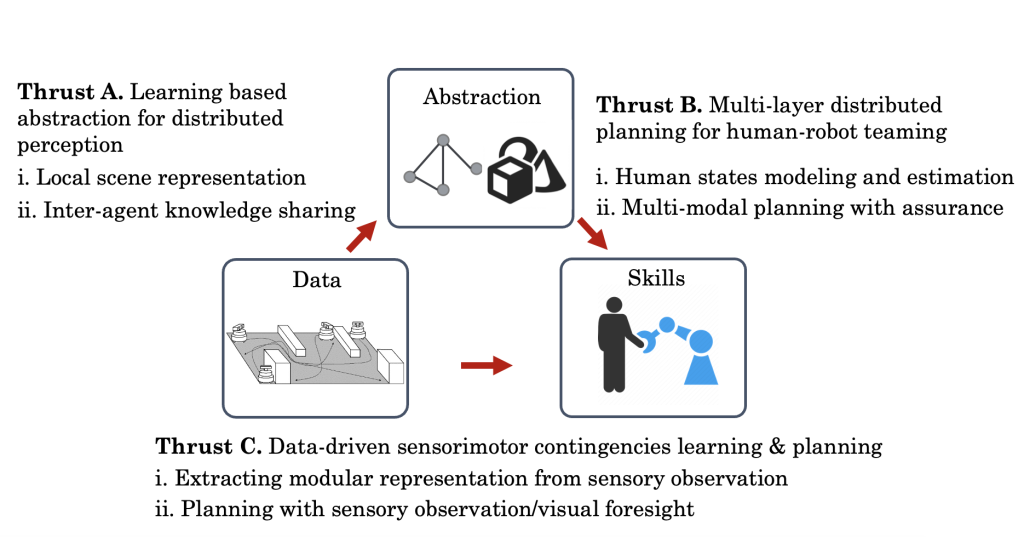

To explore unstructured and dynamic open worlds, robotic systems have to collaborate with human operators in complex, ever-changing, and unknown environments, and their behaviors should be cognizant, adaptive, and taskable. The robots need to be aware of their capabilities, identify changes in environmental dynamics, learn from past experiences to improve system performance, and understand high-level instructions to plan multi-modal strategies that depend on the context in which the system is operating. These requirements raise the following research questions:

– Cognizant: how to represent the agent’s knowledge in an unstructured environment, without a pre-defined set of scene parameters?

– Taskable: how to efficiently discover useful multi-modal distributed strategies for human-robot teams?

– Adaptive: how to learn from past sensory data to build skills that can adapt over time to the particularities of the environment?

In this context, our research focuses on foundational advances in robotics and autonomy. We aim to devise practical, computationally efficient, and provably correct algorithms that prepare autonomous systems to work synergistically with human operators in exploring unknown, unstructured, and dynamic environments. We also seek to develop robotic platforms to validate these autonomy algorithms. The underlying hypothesis of our research is that interactions between agents and the environment provide rich information. On one hand, the robot can leverage its actions and their observed effects to train a high-fidelity prediction model (Thrust A), and the learned model enables synergistic planning and control of interaction policies for human-robot teams (Thrust B). On the other hand, the robot can use historical perception data to learn skills directly, achieving efficient sensorimotor understanding and planning (Thrust C).

Please visit our lab's YouTube channel to learn more about our research!