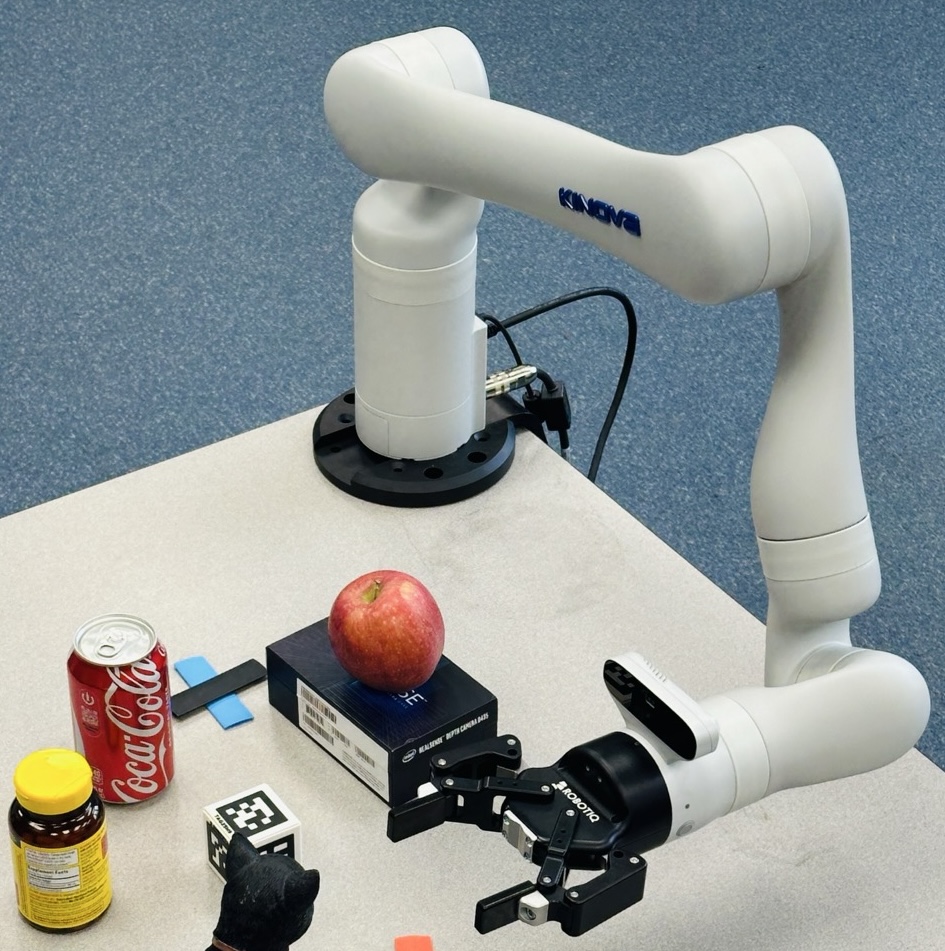

We aim to develop an uncertainty-aware robotic grasping framework that uses a variational 3D Gaussian Splatting (3DGS) model for active perception. The variational 3DGS scene representation captures the model uncertainty that arises from limited training data. We sample multiple rendered RGB–depth pairs from this representation and feed each sample into a grasp planning network (GraspNet). The variance of the resulting grasp scores across samples measures how sensitive each candidate grasp is to uncertainty in the scene, and we use it as a quantitative measure of grasp stability. The robot can then select the next best grasp based on both predicted success and uncertainty. This approach integrates Bayesian 3D scene modeling with grasp planning to achieve more reliable and adaptive robotic manipulation.
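
The selection step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the framework's implementation: `score_fn` is a hypothetical stand-in for a GraspNet forward pass that returns one score per grasp candidate, each "render" stands in for an RGB–depth pair sampled from the variational 3DGS model, and the risk-averse utility `mean - risk_weight * std` is only one plausible way to combine predicted success with uncertainty.

```python
import statistics
from typing import Callable, List, Sequence, Tuple


def score_statistics(
    renders: Sequence[object],
    score_fn: Callable[[object], Sequence[float]],
    num_candidates: int,
) -> List[Tuple[float, float]]:
    """Return (mean, variance) of the score of each grasp candidate across renders.

    `score_fn` is a hypothetical placeholder for the grasp planning network:
    it maps one sampled render to one score per candidate.
    """
    per_candidate: List[List[float]] = [[] for _ in range(num_candidates)]
    for render in renders:
        scores = score_fn(render)
        for i in range(num_candidates):
            per_candidate[i].append(scores[i])
    return [
        (statistics.fmean(s), statistics.pvariance(s)) for s in per_candidate
    ]


def select_grasp(stats: Sequence[Tuple[float, float]], risk_weight: float = 1.0) -> int:
    """Pick the candidate maximizing mean - risk_weight * std (assumed utility)."""
    utilities = [mean - risk_weight * var ** 0.5 for mean, var in stats]
    return max(range(len(utilities)), key=lambda i: utilities[i])


# Toy data: three sampled renders, two grasp candidates. Each "render" here is
# simply the score list that the (hypothetical) network would output for it.
toy_renders = [
    [0.95, 0.70],
    [0.35, 0.70],
    [0.95, 0.70],
]
stats = score_statistics(toy_renders, score_fn=lambda r: r, num_candidates=2)

best_ignoring_uncertainty = select_grasp(stats, risk_weight=0.0)
best_risk_averse = select_grasp(stats, risk_weight=1.0)
print(best_ignoring_uncertainty, best_risk_averse)
```

In this toy case, candidate 0 has the higher mean score but its score varies strongly across renders, while candidate 1 scores slightly lower but consistently. Ranking by mean alone picks candidate 0; penalizing the standard deviation picks candidate 1. The value of `risk_weight` is a free design parameter here and would need to be tuned for a real system.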