Ambrose, G. Alex, Duan, Xiaojing, Abbott, Kevin, Woodard, Victoria (2019) “Inclusive Learning Analytics to Improve STEM Student Success.” EDUCAUSE Main Conference, Chicago, IL


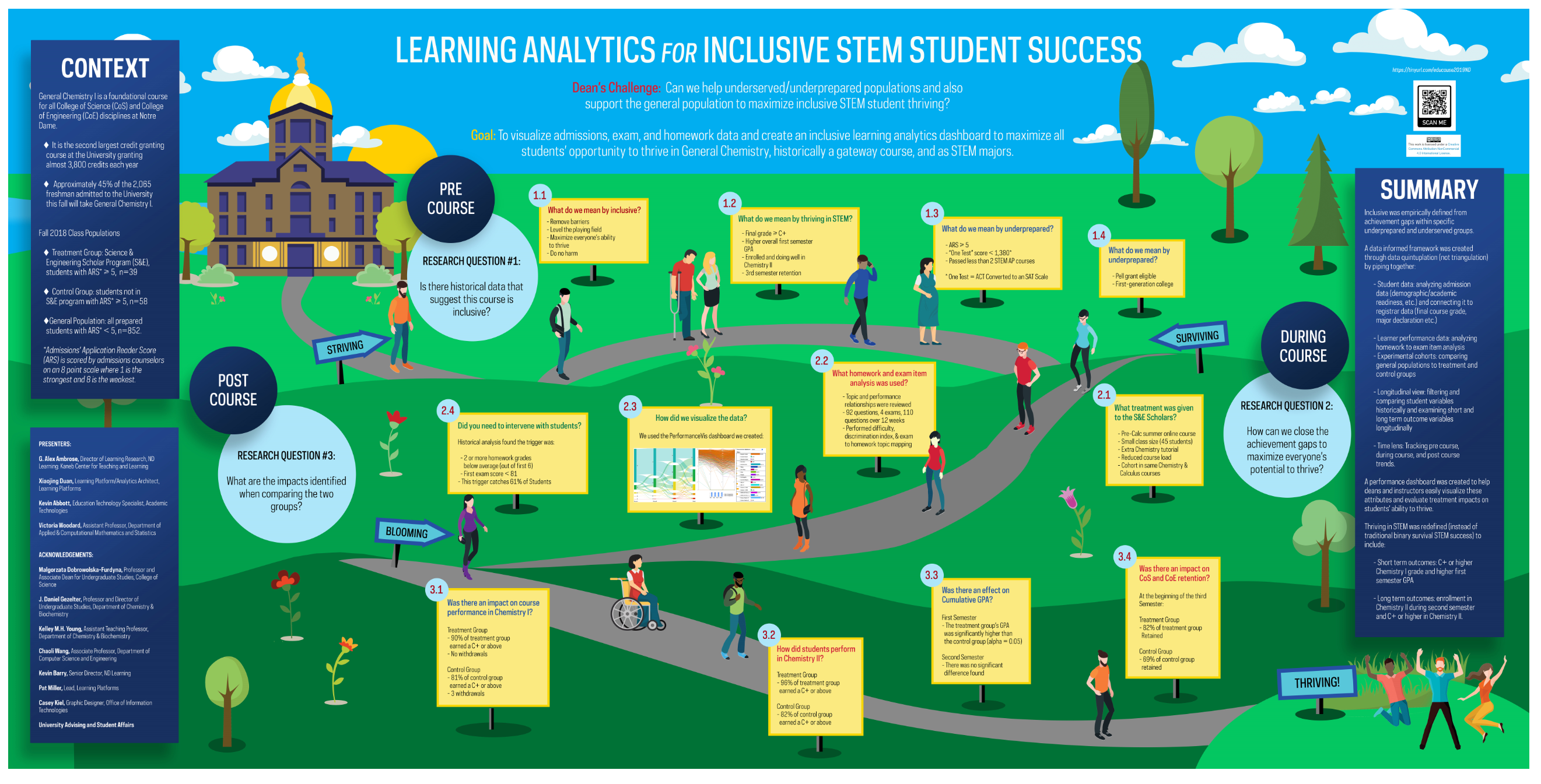

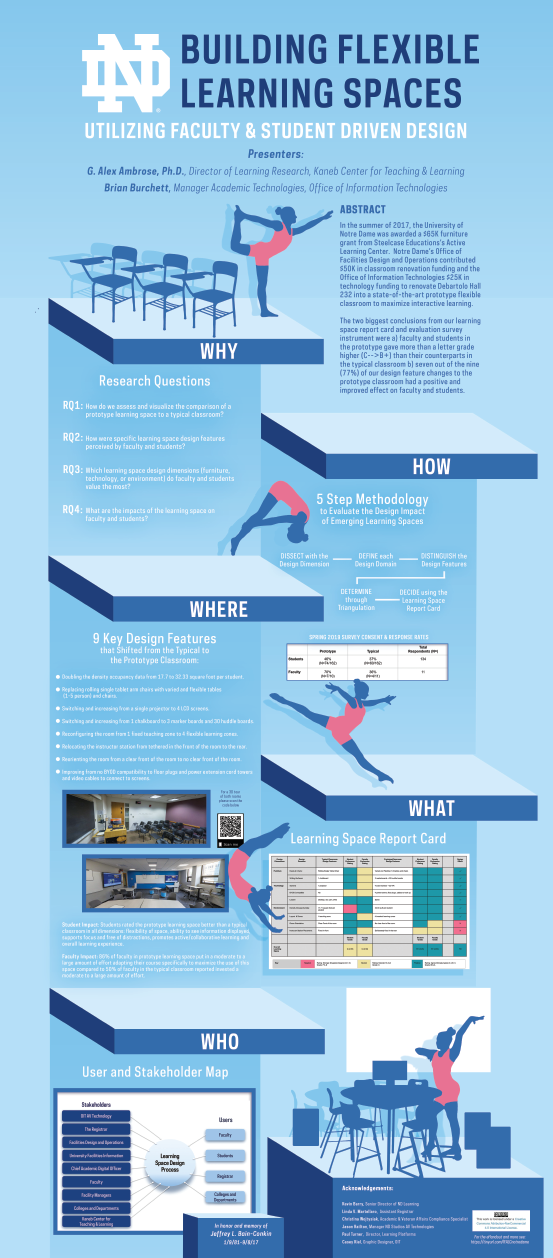

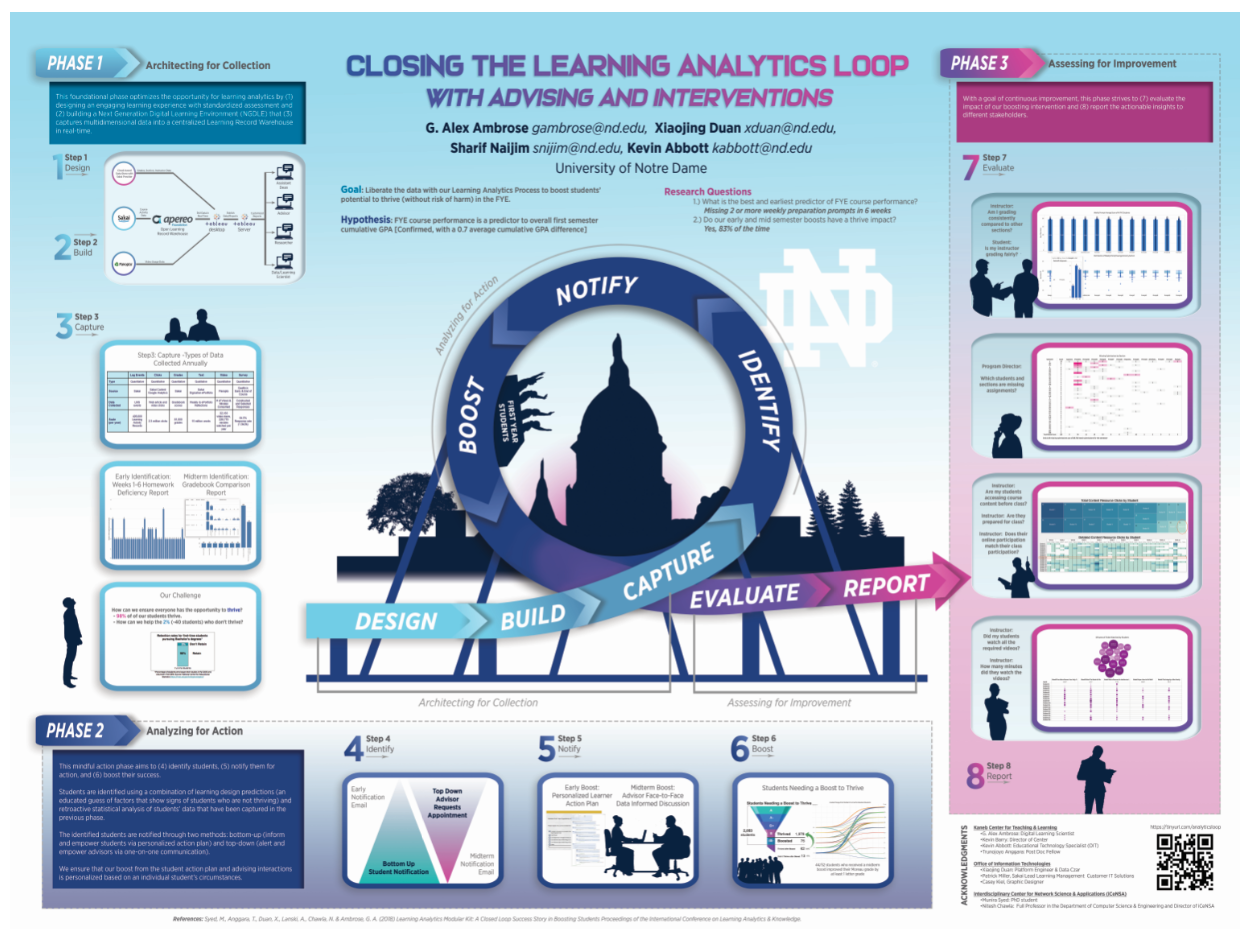

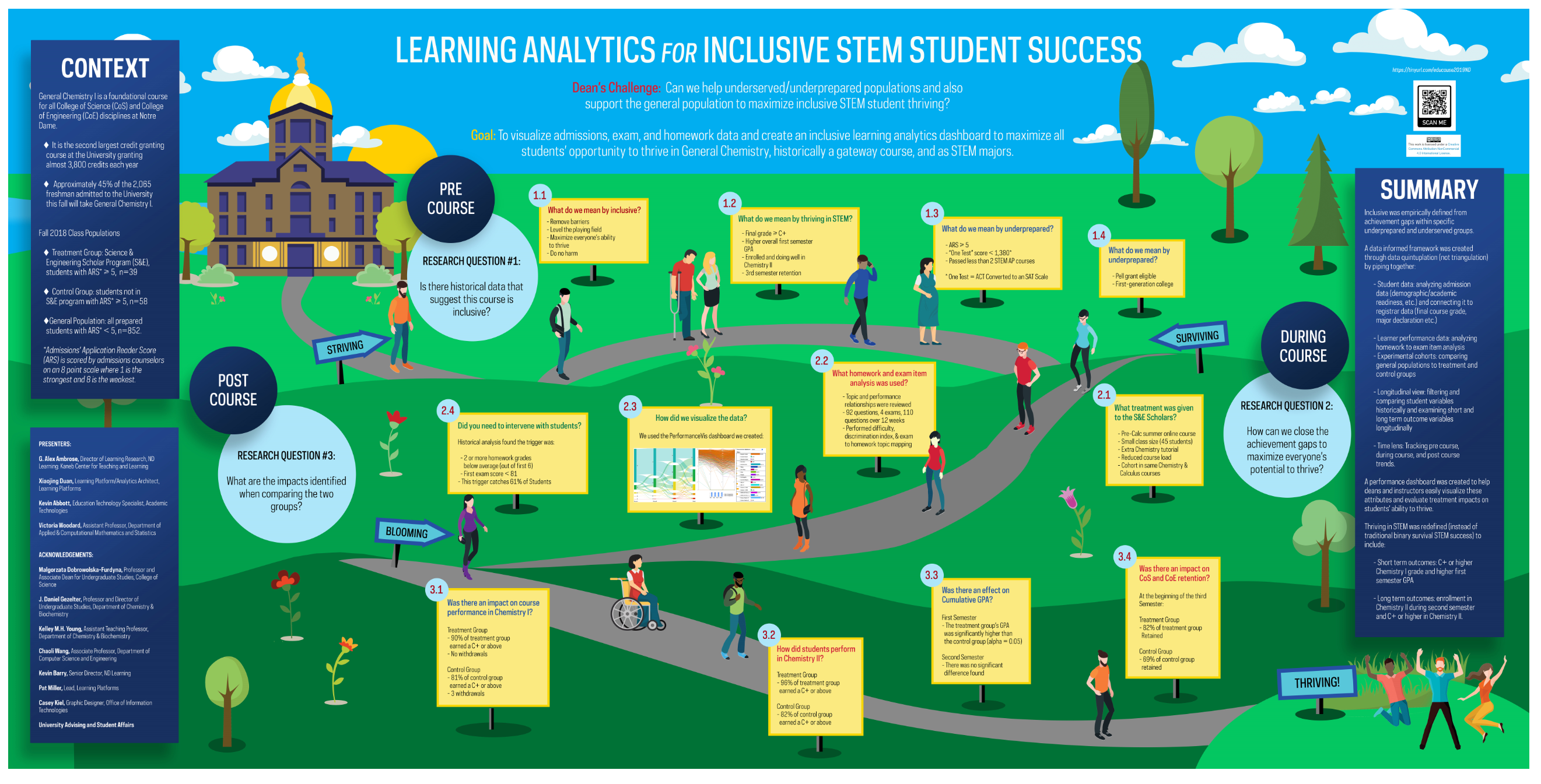

The goal of this project is to ensure that first-year students who leave Science, Technology, Engineering, and Mathematics (STEM) majors do so as a discernment decision, not because they were inadequately prepared in gateway courses or because their need for intervention went unrecognized. The key questions this project is pursuing are: (1) What research, assessment, advising, and course design questions can we ask and answer from the course and student data we capture to support student success? (2) How can we cross-reference student learning activity, homework analytics, and exam item analysis to help instructors improve course and assessment design? (3) Can we use analytics data to boost all students’ potential to thrive and improve STEM success?

Our Chemistry courses were designed to meet that challenge and help all 953 of our students (46% of all first-year students). The learning environment infrastructure was architected to follow the Next Generation Digital Learning Environment (NGDLE) dimensions of interoperability, integration, analytics, advising, and learning assessment, so that multidimensional student data can be collected seamlessly to enable comprehensive data analysis and improvement assessment (Ambrose, Abbott, & Lanski, 2017).

Our poster will describe how we built an innovative and transparent learning environment, embedded data collection in course design, captured data from multiple sources in a centralized learning records warehouse (Miller & Duan, 2018), used predictive analytics to help students identified as underperforming move toward thriving, answered key questions through visualization reports, and identified the best and earliest predictor of student success.

Related EDUCAUSE Review articles that we published on this work:

https://er.educause.edu/blogs/2018/1/ngdle-learning-analytics-gaining-a-360-degree-view-of-learning

https://er.educause.edu/articles/2017/7/under-the-hood-of-a-next-generation-digital-learning-environment-in-progress