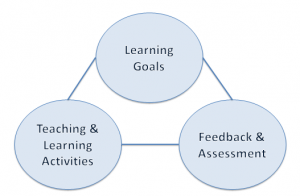

In her recent two-part workshop series, Amy Buchmann, a Graduate Associate of the Kaneb Center for Teaching and Learning, discussed some of the fundamentals of course design. An integrated course design (Fink, 2003) has three primary elements: (1) learning goals, (2) feedback and assessment, and (3) teaching and learning activities.

Figure 1. Integrated course design model. Fink, L. D. (2003). Creating significant learning experiences: An integrated approach to designing college courses. San Francisco, CA: Jossey-Bass.

The first installment of the workshop focused on crafting learning goals and effective feedback and assessment strategies. A technique that Bain (2004) describes as planning backwards offers a series of activities that incorporate these primary elements into a cohesive course design. The first step is to draft “big questions” (Bain, 2004; Huston, 2012) about what you want students to gain by the end of the course: what questions should students be able to answer, and what skills, abilities, or qualities should they develop throughout the course?

Using those “big questions,” it becomes possible to construct a list of learning goals for students (e.g., students will be able to X by the end of this course), which can subsequently be revised to include specific language. For example, rather than saying “students will understand X,” more active and specific language may include phrases like “students will predict X,” “students will differentiate X and Y,” or “students will be able to generate X” (more examples of how to do this are available on p. 7 of this handout). Once these learning goals are revised, each learning goal can be transformed into a graded assessment or a chance to provide students with feedback. Angelo and Cross (1993) provide a list of classroom assessment techniques (CATs) that can serve as a guide. Using your learning goals to create course assessments ensures that what you hope students will take away from your class is what students are actually doing in the course.

The second installment of this workshop focused on crafting a learner-centered syllabus, which includes formulating teaching and learning activities. For many instructors, the easiest way to develop a course is to first decide what texts or topics to cover in lectures, then to arrange exams and assignments around these. However, these “text/lecture-focused courses” often fail to incorporate learning goals. Therefore, designing your course with an “assignment focus” may allow for a more integrated course design. Using those learning goals that you previously established and revised, you can build your course one learner-centered assignment at a time. While doing so, there are three questions to keep in mind: (1) “Are the assignments likely to result in the learning you want?”, (2) “Is the assignment aligned with the learning goals?”, and (3) “Is the workload appropriate?” Once you have figured out which assessments to include, it becomes easier to craft your syllabus.

The syllabus is where all three primary elements of the integrated course design come together. In general, the syllabus serves a variety of functions, not the least of which is that it acts as a “contract” between the instructor and the student (Slattery and Carlson, 2005). For instructors, the syllabus serves as a planning tool for the semester (helping us meet course goals in a timely manner) and helps set the tone of the class. For students, the syllabus helps structure their workload and informs them about course policies. Thus, the best syllabi will communicate your expectations, emphasize student responsibility, and answer students' questions before they ask them.

To accomplish these tasks, well-designed syllabi often include some common elements. Though not an exhaustive list, common elements include: contact information, course description/goals, student learning goals, materials, schedule or calendar, requirements/responsibilities, policies, and grading information. It may also be a good idea to build flexibility into the schedule, and Huston (2012) has some suggestions for how to do so. For instance, a phrase like “The instructor reserves the right to change the syllabus at any time” may make you appear disorganized or suggest to students that you may not honor the “contract” between the two of you. Instead, more diplomatic wording might include “your learning is my principal concern, so I may modify the schedule if it will facilitate your learning” or “we may discover that we want to spend more time on certain topics and less time on others. I’ll consider changing the schedule if such a change would benefit most students’ learning in this course.” These phrasings inform students that any changes are for their benefit and that the instructor truly cares about student learning.

Taking all of these fundamentals of course design into account, you can construct an organized, assessment-focused course with student learning in mind. To learn about this and other Kaneb Center events, visit our workshop series page.