It’s pretty easy to get yourself an Amazon Web Services account, log into the web console, and start firing up instances left and right. It feels awesome, and it should, because you are doing a lot of cool things with just a few mouse clicks. Soon, however, that buzz is going to wear off, because working this way is not sustainable at scale, and it is not easily repeatable. If you want to get to #DevOps nirvana, creating and destroying instances automatically with each branch commit, you are going to have to abandon that GUI and get to the command line.

So you google “aws command line,” and behold: the AWS Command Line Interface. These tools are great, and probably worth a blog post of their own. They are an attempt to provide a unified CLI that replaces the earlier tools unique to each service (e.g., an EC2 tool, a CloudFormation tool), and they let you do quite a bit from the command line. But if you want to control everything in AWS while also leveraging the power of a mature scripting language, you want Boto for Python.

First, we’ll need Python. Mac and Linux users are good to go; Windows users will need to install it first. We’re also going to need pip, the Python package manager. Older Python releases don’t ship with it, but easy_install, a tool from setuptools, can fetch it for us. This worked on my Mac:

$ sudo easy_install pip

Okay, now you’d better learn some basic Python syntax. Go ahead; I’ll wait.

Good. Now we’re ready. Install the boto SDK with pip:

$ pip install boto

Then create a file called .boto in your home directory with the following contents:

[Credentials]
aws_access_key_id = YOUR_ACCESS_KEY_ID
aws_secret_access_key = YOUR_SECRET_ACCESS_KEY
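The .boto file is a plain INI-style file. As a quick sanity check, here’s a minimal sketch using Python 3’s standard configparser to confirm the [Credentials] section parses and holds both keys. read_boto_credentials is a hypothetical helper for illustration, not part of boto:

```python
import configparser


def read_boto_credentials(text):
    """Hypothetical helper: parse .boto-style text and return the two keys
    from the [Credentials] section as (access_key_id, secret_access_key)."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    section = parser["Credentials"]  # KeyError if the section is missing
    return section["aws_access_key_id"], section["aws_secret_access_key"]


sample = """
[Credentials]
aws_access_key_id = YOUR_ACCESS_KEY_ID
aws_secret_access_key = YOUR_SECRET_ACCESS_KEY
"""

key_id, secret = read_boto_credentials(sample)
print(key_id)  # YOUR_ACCESS_KEY_ID
```

To check your real file, swap read_string for parser.read(os.path.expanduser("~/.boto")).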

Where to get those credentials? I’ll let this guy tell you.

Now we’re ready for some examples. Readers in the OIT can check out the full scripts here. Let’s start by making a Virtual Private Cloud (VPC), a.k.a. a virtual data center. Despite their central role in a cloud infrastructure, VPCs are quite simple to create. From create_vpc.py:

#!/usr/bin/python
import boto
from boto.vpc import VPCConnection

You’ve got the typical shebang line, which tells the system to run the script with the Python interpreter so we can execute it directly. Then the important part: importing boto. The first import is enough if you go through boto’s helper functions such as boto.connect_vpc(), but the second one loads the boto.vpc module and lets us write “VPCConnection” instead of the fully qualified “boto.vpc.VPCConnection.”
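To see what the two import styles do without needing boto installed, here is the same idea with a standard-library module; collections stands in for boto purely for illustration:

```python
import collections                   # binds only the name "collections"
from collections import OrderedDict  # binds "OrderedDict" directly

# Both names refer to the very same class object; the "from" form
# just saves typing the module prefix every time.
assert OrderedDict is collections.OrderedDict

d1 = collections.OrderedDict(a=1)  # fully qualified
d2 = OrderedDict(a=1)              # short name
print(d1 == d2)  # True
```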

The parameters for creating a VPC are…

- name: friendly name for the VPC (we’ll use VPC_ND_Datacenter). This isn’t actually a create_vpc argument; instead, we tag the VPC with a “Name” key after we make it

- cidr_block: the range of private IP addresses the VPC will use, written in Classless Inter-Domain Routing (CIDR) notation (alright, Bob Winding helped me). Example: 10.0.0.0/16, which covers the 65,536 addresses from 10.0.0.0 to 10.0.255.255

- tenancy: default / dedicated. Dedicated requires you to pay a flat fee to Amazon, but it means you never have to worry that you’re sharing hardware with another AWS customer.

- dry_run: set to True to check whether the request would succeed (including whether you have permission to make it) without actually creating anything

Basically, cidr_block is the only thing you really need. I told you it was a simple construct! The create_vpc call below uses a variable for each parameter.
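Before handing a cidr_block to create_vpc, it can help to check it locally. Here’s a minimal sketch using Python 3’s standard ipaddress module. check_vpc_cidr is a hypothetical helper, and the /16 to /28 limits reflect AWS’s allowed VPC block sizes:

```python
import ipaddress

# AWS accepts VPC CIDR blocks from /16 (largest) down to /28 (smallest).
MIN_PREFIX, MAX_PREFIX = 16, 28


def check_vpc_cidr(cidr):
    """Hypothetical helper: validate a VPC CIDR block and describe it.

    Returns (number_of_addresses, first_address, last_address).
    Raises ValueError for malformed blocks or out-of-range prefixes.
    """
    # strict parsing rejects blocks with host bits set, e.g. 10.0.0.1/16
    net = ipaddress.ip_network(cidr)
    if net.version != 4:
        raise ValueError("a VPC's primary CIDR block must be IPv4")
    if not MIN_PREFIX <= net.prefixlen <= MAX_PREFIX:
        raise ValueError("prefix /%d is outside /16 to /28" % net.prefixlen)
    return net.num_addresses, str(net[0]), str(net[-1])


print(check_vpc_cidr("10.0.0.0/16"))  # (65536, '10.0.0.0', '10.0.255.255')
```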

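# Settings for the new VPC
name = "VPC_ND_Datacenter"
cidr = "10.0.0.0/16"
tenancy = "default"
dryrun = False  # True only checks the request; no VPC comes back
c = VPCConnection()  # picks up credentials from ~/.boto
datacenters = c.get_all_vpcs(filters=[("cidrBlock", cidr)])
if not datacenters:
    datacenter = c.create_vpc(cidr_block=cidr, instance_tenancy=tenancy, dry_run=dryrun)
    print(datacenter)
else:
    datacenter = datacenters.pop(0)
    print("Requested VPC already exists!")
datacenter.add_tag("Name", name)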

First, create a VPCConnection object, which has the methods we’ll use to list and create VPCs. We can refer to it by its short name thanks to the “from … import” above. Next, call get_all_vpcs with a filter to check whether a VPC with this CIDR block already exists.

If that list is empty, we call create_vpc. Otherwise, we print a message that it already exists and pop the first item off the list; that’s the object representing the existing VPC. Either way, we finish by adding a “Name” tag to our VPC.
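Because the script checks before it creates, running it twice won’t give you two VPCs. Here’s a sketch that wraps that logic in a function and exercises it against a hypothetical in-memory FakeVPCConnection (not part of boto) that mimics only get_all_vpcs and create_vpc, so you can try it without touching AWS:

```python
class FakeVPC(object):
    """Hypothetical stand-in for a boto VPC object."""

    def __init__(self, cidr_block):
        self.cidr_block = cidr_block
        self.tags = {}

    def add_tag(self, key, value):
        self.tags[key] = value


class FakeVPCConnection(object):
    """Hypothetical stand-in for VPCConnection; not part of boto."""

    def __init__(self):
        self.vpcs = []

    def get_all_vpcs(self, filters=None):
        # Only understands the ("cidrBlock", value) filter used above.
        wanted = dict(filters or [])
        return [v for v in self.vpcs
                if "cidrBlock" not in wanted
                or v.cidr_block == wanted["cidrBlock"]]

    def create_vpc(self, cidr_block, instance_tenancy=None, dry_run=False):
        vpc = FakeVPC(cidr_block)
        self.vpcs.append(vpc)
        return vpc


def get_or_create_vpc(conn, cidr, name):
    """Find the VPC with this CIDR block, creating it only if missing."""
    existing = conn.get_all_vpcs(filters=[("cidrBlock", cidr)])
    vpc = existing[0] if existing else conn.create_vpc(cidr_block=cidr)
    vpc.add_tag("Name", name)
    return vpc


conn = FakeVPCConnection()
first = get_or_create_vpc(conn, "10.0.0.0/16", "VPC_ND_Datacenter")
second = get_or_create_vpc(conn, "10.0.0.0/16", "VPC_ND_Datacenter")
print(first is second, len(conn.vpcs))  # True 1
```

Since get_or_create_vpc only calls methods that boto’s VPCConnection also provides, it should in principle accept a real connection as well.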

This stuff is that easy.

Once you divide that VPC into subnets and create a security group or two, how about creating an actual EC2 instance?
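Dividing the VPC into subnets is mostly CIDR arithmetic. A minimal sketch with Python 3’s standard ipaddress module, assuming the 10.0.0.0/16 block from above and /24 subnets (an arbitrary size chosen for illustration):

```python
import ipaddress

# Carve the VPC's /16 block into /24 subnets of 256 addresses each.
vpc_block = ipaddress.ip_network("10.0.0.0/16")
subnets = list(vpc_block.subnets(new_prefix=24))

print(len(subnets))            # 256
print(subnets[0], subnets[1])  # 10.0.0.0/24 10.0.1.0/24

# AWS reserves 5 addresses in every subnet (the first four and the last).
usable = subnets[0].num_addresses - 5
print(usable)                  # 251

# Hypothetical plan: one public and one private subnet. With boto 2, each
# would then be created with something like conn.create_subnet(vpc.id, cidr).
plan = {"public": str(subnets[0]), "private": str(subnets[1])}
```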

from boto.ec2.connection import EC2Connection

ec2 = EC2Connection()
reservation = ec2.run_instances(
    image_id='ami-83e4bcea',
    subnet_id='MY_SUBNET_ID',
    instance_type='t1.micro',
    security_group_ids=['SOME_SECURITYGROUP_ID', 'ANOTHER_ONE'])
# run_instances returns a Reservation; our new instance is its first entry
reservation.instances[0].add_tag("Name", "world's greatest ec2 instance")

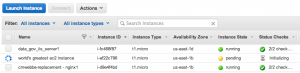

Similar setup here. We’re using the EC2Connection object instead. Note that the run_instances method doesn’t pass back the instance directly, but gives you a “reservation” object that can apparently have an array of instances. AFAIK you can only create one at a time with this method. Anyway, we tag it and boom! Here’s our instance, initializing:

and to think you wanted to click buttons
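If you do want several instances at once, boto 2’s run_instances takes min_count and max_count arguments, and the reservation’s instances list then holds all of them. A sketch, where launch_and_tag is a hypothetical helper and the numbered Name tags are just one possible naming scheme:

```python
def launch_and_tag(ec2, image_id, count, name, **kwargs):
    """Start `count` instances in one run_instances call and tag each one.

    Hypothetical helper. `ec2` should behave like boto 2's EC2Connection;
    any extra keyword arguments (subnet_id, instance_type,
    security_group_ids, ...) are passed straight through to run_instances.
    """
    reservation = ec2.run_instances(
        image_id,
        min_count=count,  # fail the request if fewer than this many can start
        max_count=count,  # never start more than this many
        **kwargs
    )
    # One Reservation, many instances: give each a numbered Name tag.
    for i, instance in enumerate(reservation.instances, start=1):
        instance.add_tag("Name", "%s-%d" % (name, i))
    return reservation.instances
```

For example, launch_and_tag(EC2Connection(), 'ami-83e4bcea', 3, 'web', subnet_id='MY_SUBNET_ID', instance_type='t1.micro') would request three instances tagged web-1 through web-3.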

We’ve got more work to do before we can provision this instance via Puppet, deploy applications on it, run services, and make it available on a Notre Dame domain. Still, this is a great start. Maybe some OIT folks want to jump in and help! Talk to me, and be sure to check out the full boto API reference for all available methods.