A while back, Scott Kirner handed responsibility for the CITS (nee ES) Tech Sessions to me. With all the technology changes happening in OIT right now, there are plenty of exciting topics to learn and discuss. So far in 2014, we have had presentations on the following:

- Responsive Web Design

- Provisioning a Rails development environment with Vagrant

- Git / GitHub basics (thanks Peter Wells!)

Having been justly called out for not providing access to my presentation material, I will now play catch-up and share some slides! Be aware that these decks only provide partial information; each meeting had a significant live demo component. They probably need some tweaking and they definitely need context. For my part, I have planned for weeks to write detailed blog posts on each topic (especially the second one, as I had hardly any time to discuss capistrano). I seem to be writing a lot today, so maybe I’ll get to them soon. It’s important to share this information broadly!

For now, try this: the CITS Tech Session resource folder. Everything’s in there, but let me provide some details.

- Responsive Web Design slides

- Demo for this was pretty bare-bones. I put it into GitHub. Hopefully it makes some sense…

- Rails deployment in Vagrant

- the most underserved probably. Lots of good info on vagrant, but not detailed enough on the puppet / capistrano part.

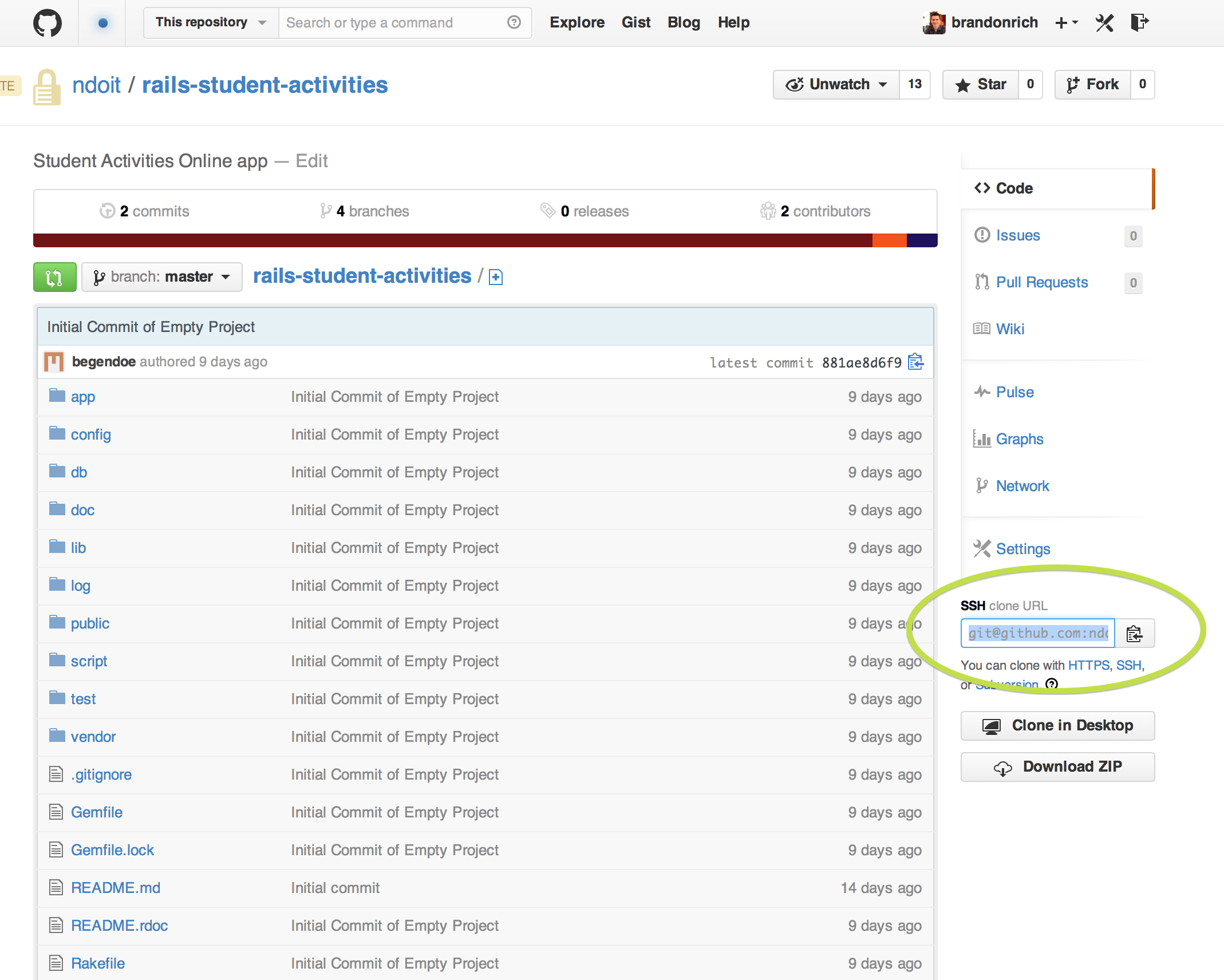

- Git repo for the vagrantfile that builds the rails/nginx/unicorn stack (+ oracle client)

- Git repo for the main manifest

- The modules used by this manifest are all downloaded in the shell provisioner of that vagrantfile, so you can see them there. They’re all in NDOIT public repos.

- Git repo for the CAS Rails test app — authenticates a user to CAS from the root page, then displays some CAS metadata.

- The Vagrantfile used to actually download and deploy that app automatically, but I have removed that step.

- This probably deserves three different blogs posts

- The puppet modules and CAS test app are extended from code started by Peter Wells!

- Rails deployment — not a CITS tech session, but it describes a progression on the work from #2, above. I demoed a remote deployment to a totally fresh machine with a “deploy” user and and /apps directory — much like we might do in production.

- This presentation was aimed at Ops staff, so I get into the stack a bit more.

- I also created an “autodeploy” script to wrap capistrano, to try to show one way in which our current RFC process could accommodate such deployment mechanisms. I hope for something even more flexible in the future.

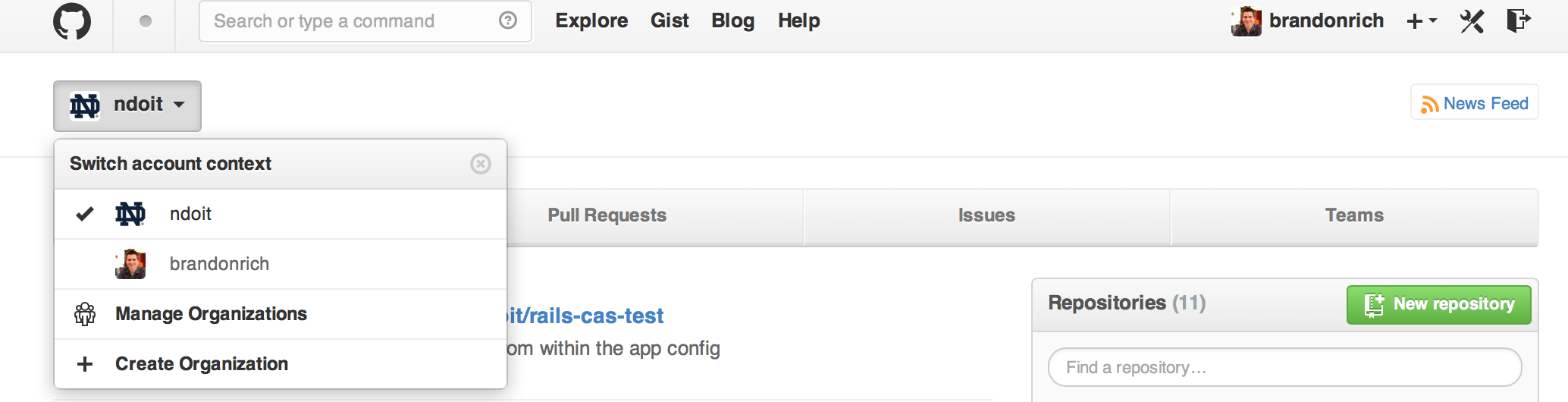

No slides from today, but my last two blog posts will provide some information about the GitHub part. If you want to learn Git, the official site has some great documentation. Here are Git Basics and Basic Branching and Merging. Git’s easy branching is one of the most interesting and exciting parts of working with Git, and will be the foundation for multi-developer coding in the future.

As I have mentioned elsewhere, I know not everyone can make each session. Blog posts will certainly help make the content accessible, but in addition, I am 100% open to doing recap sessions if there are enough people who want it! Heck, I’ll even sit down with you one-on-one. So please reach out to me. The more we can share our combined knowledge, the better developers we’ll be.