Archive for the ‘Uncategorized’ Category

Catholic Pamphlets and practice workflow

Posted on September 27, 2011 in Uncategorized

The Catholic Pamphlets Project has passed its first milestone: practicing with its workflow, which included digitizing thirty-ish pamphlets, making them accessible in the Libraries’ catalog and “discovery system”, and implementing a text mining interface. This blog posting describes this success in greater detail.

For the past four months or so a growing number of us have been working on a thing affectionately called the Catholic Pamphlets Project. To one degree or another, these people have included:

Aaron Bales • Adam Heet • Denise Massa • Eileen Laskowski • Jean McManus • Julie Arnott • Lisa Stienbarger • Lou Jordan • Mark Dehmlow • Mary McKeown • Natalia Lyandres • Pat Lawton • Rejesh Balekai • Rick Johnson • Robert Fox • Sherri Jones

Our long-term goal is to digitize the set of 5,000 locally held Catholic pamphlets, save them in the library’s repository, update the catalog and “discovery system” (Primo) to include links to digital versions of the content, and provide rudimentary text mining services against the lot. The short-term goal is/was to apply these processes to 30 of the 5,000 pamphlets. And I am happy to say that as of Wednesday (September 21) we completed our short-term goal.

The Hesburgh Libraries owns approximately 5,000 Catholic pamphlets, a set of physically smaller rather than larger publications dating from the early 1800s to the present day. All of these items are located in the Libraries’ Special Collections Department, and all of them have been individually cataloged.

As a part of a university (President’s Circle) grant, we endeavored to scan these documents, convert them into PDF files, save them to our institutional repository, enhance their bibliographic records, make them accessible through our catalog and “discovery system”, and provide text mining services against them. To date we have digitized just less than 400 pamphlets. Each page of each pamphlet has been scanned and saved as a TIFF file. The TIFF files were concatenated, converted into PDF files, and OCR’ed. The sum total of disk space consumed by this content is close to 92GB.

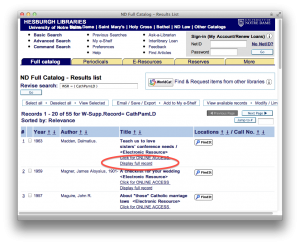

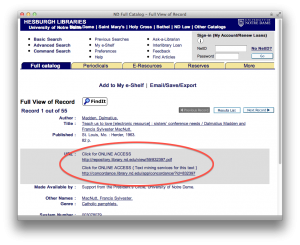

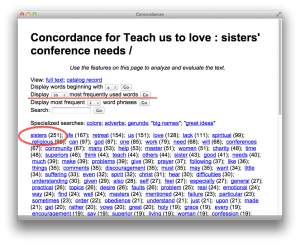

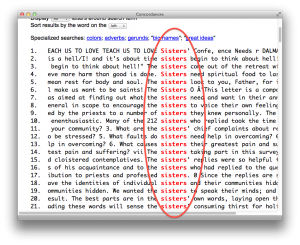

In order to practice with the workflow, we selected about 30 of these pamphlets and enhanced their bibliographic records to denote their digital nature. These enhancements included URLs pointing to PDF versions of the pamphlets as well as URLs pointing to the text mining interfaces. When the enhancements were done we added the records to the catalog. Once there they “flowed” to the “discovery system” (Primo). You can see these records from the following URL: http://bit.ly/qcnGNB. At the same time we extracted the plain text from the PDFs and made it accessible via a text mining interface allowing the reader to see what words/phrases are most commonly used in individual pamphlets. The text mining interface also includes a concordance: http://concordance.library.nd.edu/app/. These latter services are implemented as a means of demonstrating how library catalogs can evolve from inventory lists to tools for use & understanding.

While the practice may seem all but trivial, it required about three months of time. Between vacations, conferences, other priorities, and minor glitches the process took more time than originally planned. The biggest glitch was with Internet Explorer. We saved our PDF files in Fedora. Easy. Each PDF file had a URL coming from Fedora which we put into the cataloging records. But alas, Internet Explorer was not able to process the Fedora URLs because: 1) Fedora was not pointing to files but data streams, and/or 2) Fedora was not including the HTTP “Content-Disposition” header, which can carry a file name complete with an extension. No other browsers we tested had these limitations. Consequently we (Rob Fox) wrote a bit of middleware taking a URL as input, getting the content from Fedora, and passing it back to the browser. Problem solved. This was a hack for sure. “Thank you, Rob!”
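For the curious, the idea behind such middleware can be sketched in a few lines of Python. This is only an illustration and not the code Rob wrote. The repository URL shape, the `EXTENSIONS` table, and the function names are all assumptions of mine. The sketch takes a URL as input, gets the content, and returns it along with a Content-Disposition header carrying a file name with an extension.

```python
from urllib.parse import urlparse
from urllib.request import urlopen

# Hypothetical mapping from MIME type to file name extension
EXTENSIONS = {"application/pdf": ".pdf"}


def disposition_headers(source_url, content_type="application/pdf"):
    """Build headers that tell the browser what kind of file it is getting."""
    # Assumption: the last path segment of the URL is a usable base file name
    name = urlparse(source_url).path.rstrip("/").split("/")[-1] or "document"
    extension = EXTENSIONS.get(content_type, "")
    # Add the extension only when the name does not already end with it
    if extension and not name.lower().endswith(extension):
        name += extension
    return [
        ("Content-Type", content_type),
        ("Content-Disposition", f'inline; filename="{name}"'),
    ]


def relay(source_url, fetch=urlopen):
    """Get the content from the repository and return (headers, body)."""
    with fetch(source_url) as response:
        body = response.read()
    return disposition_headers(source_url), body
```

A real deployment would wrap `relay` in a web application and restrict the URLs it is willing to fetch. Passing the fetching function in as a parameter makes the logic easy to try without a network connection.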

We presently have no plans (resources) to digitize the balance of the pamphlets, but it is my personal hope we process (catalog, store, and make accessible via text mining) the remaining 325 pamphlets before Christmas. Wish us luck.

Catholic Youth Literature Project update

Posted on August 31, 2011 in Uncategorized

This is a tiny Catholic Youth Literature Project update.

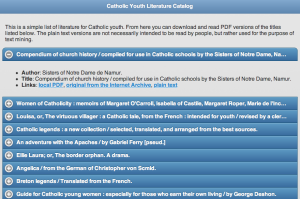

Using a Perl module called Lingua::Fathom I calculated the size (in words) and readability scores of all the documents in the Project. I then updated the underlying MyLibrary database with these values and wrote them back out again to the “catalog”. Additionally, I spent time implementing the concordance. The interface is not very iPad-like right now, but that will come. The following (tiny) screen shots illustrate the fruits of my labors.
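To give a feel for the arithmetic behind sizes and readability scores, here is a rough Python sketch. It is not the Perl module's code, and I have not tried to reproduce its method. It applies the published Flesch Reading Ease and Flesch-Kincaid Grade Level formulas, and it assumes a crude vowel-group heuristic for counting syllables, so its numbers will not match those from a more careful tool.

```python
import re


def count_syllables(word):
    """Roughly estimate syllables by counting groups of vowels (a heuristic)."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def measure(text):
    """Return size (in words) and two readability scores for a text."""
    words = re.findall(r"[A-Za-z']+", text)
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not words or not sentences:
        return {"words": 0, "sentences": 0, "flesch": None, "kincaid": None}
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return {
        "words": len(words),
        "sentences": len(sentences),
        # Flesch Reading Ease: higher scores denote easier reading
        "flesch": 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word,
        # Flesch-Kincaid Grade Level: an approximate school grade
        "kincaid": 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59,
    }
```

Calling `measure` on the plain text of each document yields values that could then be saved to a database or written to a “catalog”.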

Give it a whirl and tell me what you think, but remember, it is designed for iPad-like devices.

Catholic Youth Literature Project: A Beginning

Posted on August 27, 2011 in Uncategorized

This posting outlines some of the beginnings behind the Catholic Youth Literature Project.

The Catholic Youth Literature Project is about digitizing, teaching, and learning from public domain literature from the 1800s intended for Catholic children. The idea is to bring together some of this literature, make it easily available for downloading and reading, and enable learners to “read” texts in new & different ways. I am working with Jean McManus, Pat Lawton, and Sean O’Brien on this Project. My specific tasks are to:

- assemble a corpus of documents, in this case about 50 PDF files of books written for Catholic children from the 1800s

- “catalog” each item in the corpus and describe it using author names, titles, size (measured in words), readability (measured by grade level, etc.), statistically significant key words, names & places programmatically extracted from the texts, and dates

- enable learners to download the book and read it in the traditional manner

- provide “services against the texts” where these services include things such as but not limited to: list the most frequently used words or phrases in the book, list all the words starting with a given letter, chart & graph where those words and phrases exist in the text, employ a concordance against the texts so the reader can see how the words are used in context, list all the names & places from the text and allow the reader to look them up in Wikipedia as well as plot them on a world map, programmatically summarize the book, extract all the date-related values from the book and plot the result on a timeline, tabulate the parts-of-speech (nouns, verbs, adjectives, etc.) in a document and graph the result, and provide the means for centrally discussing the content of the books with fellow learners

- finally, provide all of these services on an iPad
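Two of the simpler services listed above, listing the words starting with a given letter and locating where a word exists in a text, can be sketched in Python as follows. This is an illustration only and not the Project's implementation. The function names and the simple tokenizer are assumptions of mine.

```python
import re
from collections import Counter


def tokenize(text):
    """Split a text into lowercased words (a deliberately simple tokenizer)."""
    return re.findall(r"[a-z']+", text.lower())


def words_starting_with(text, letter):
    """List (word, count) pairs for words beginning with the given letter."""
    counts = Counter(w for w in tokenize(text) if w.startswith(letter.lower()))
    return counts.most_common()


def positions(text, word):
    """Return relative positions (0.0 to 1.0) of a word, suitable for charting."""
    tokens = tokenize(text)
    return [i / len(tokens) for i, t in enumerate(tokens) if t == word.lower()]
```

The list returned by `positions` could be handed to any charting library in order to graph where a word clusters in a book.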

Written (and spoken) languages follow sets of loosely defined rules. If this were not the case, then none of us would be able to understand one another. If I have digital versions of books, I can use a computer to extract and tabulate the words/phrases they contain. Once that is done I can then look for patterns or anomalies. For example, I might use these tools to see how Thoreau uses the word “woodchuck” in Walden. When I do I see that he doesn’t like woodchucks because they eat his beans. In addition, I can see how Thoreau used the word “woodchuck” in a different book and literally see how he used it differently. In the second book he discusses woodchucks in relation to other small animals. A reader could learn these things through the traditional reading process, but doing so takes considerable time and effort. These tools will enable the reader to do such things across many books at the same time.
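A concordance (sometimes called a keyword-in-context index) is one of the simplest of these tools. The following Python sketch shows the general idea. It is not the code behind my interface, and the function name and the `width` parameter are merely illustrative.

```python
import re


def concordance(text, keyword, width=30):
    """Return each occurrence of keyword with `width` characters of context."""
    # Collapse line breaks and runs of whitespace into single spaces
    flat = " ".join(text.split())
    pattern = r"\b%s\b" % re.escape(keyword)
    lines = []
    for match in re.finditer(pattern, flat, flags=re.IGNORECASE):
        left = flat[max(0, match.start() - width):match.start()]
        right = flat[match.end():match.end() + width]
        # Right-align the left context so the keywords line up in a column
        lines.append(f"{left:>{width}}{match.group(0)}{right}")
    return lines
```

Given the plain text of a book, `print("\n".join(concordance(book, "woodchuck")))` displays every use of the word lined up in a column, where `book` is assumed to be a string holding the text. Reading down the column makes differences in usage easy to spot.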

In the Spring O’Brien is teaching a class on children and Catholicism. He will be using my tool as a part of his class.

I do not advocate this tool as a replacement for traditional “close” reading. This is a supplement. It is an addition. These tools are analogous to tables-of-contents and back-of-the-book indexes. Just because a person reads those things does not mean they understand the book. Similarly, just because they use my tools does not mean they know what the book contains.

I have created the simplest of “catalogs” so far, and here is a screen dump:

You can also try the “catalog” for yourself, but remember, the interface is designed for iPads (and other Webkit-based browsers). Your mileage may vary.

Wish us luck, and ‘more later.

Pot-Luck Picnic and Mini-Disc Golf Tournament

Posted on August 22, 2011 in Uncategorized

The 5th Annual Hesburgh Libraries Pot-Luck Picnic And Mini-Disc Tournament was an unqualified success. Around seventy-five people met to share a meal and themselves. I believe this was the biggest year for the tournament with about a dozen teams represented. Team Hanstra took away the trophy after a sudden death playoff against Team Procurement. Both teams had scores of 20. “Congrats, Team Hanstra! See you next year.”

From the picnic’s opening words:

Libraries are not about collections. Libraries are not about public service. Libraries are not about buildings and spaces. Libraries are not about books, journals, licensed content, nor computers. Instead, libraries are about what happens when all of these things are brought together into a coherent whole. None of these things are more important than the others. None of them come before the others. They are all equally important. They all have more things in common than differences.

That is what this picnic is all about. It is about sharing time together and appreciating our similarities. Only through working together as a whole will we be able to accomplish our goal — providing excellent library services to this, the great University of Notre Dame.

Gotta go. Gotta throw.

Code4Lib Midwest: A Travelogue

Posted on August 13, 2011 in Uncategorized

This is a travelogue documenting my experiences at the second Code4Lib Midwest Meeting (July 28 & 29, 2011) at the University of Illinois, Chicago.

Day #1

The meeting began with a presentation by Peter Schlumpf (Avanti Library Systems). In it he described and demonstrated Avanti Nova, an application used to create and maintain semantic maps. To do so, a person first creates objects denoted by strings of characters. This being Library Land, these strings of characters can be anything from books to patrons, from authors to titles, from URLs to call numbers. Next a person creates links (relationships) between objects. These links are seemingly simple. One points to another, vice versa, or the objects link to each other. The result of these two processes forms a thing Schlumpf called a relational matrix. Once the relational matrix is formed queries can be applied against it and reports can be created. Towards the end of the presentation Schlumpf demonstrated how Avanti Nova could be used to implement a library catalog as well as represent the content of a MARC record.

Robert Sandusky (University of Illinois, Chicago) shared with the audience information about a thing called the DataOne Toolkit. DataOne is a federation of data repositories including nodes such as Dryad, MNs, and UC3 Merritt. The Toolkit supports an application programmer interface to support three levels of federation compliance: read, write, and replicate. I was particularly interested in DataOne’s data life cycle: collect, assure, describe, deposit, preserve, discover, integrate, analyze, collect. I also liked the set of adjectives and processes used to describe the vision of DataOne: adaptive, cognitive, community building, data sharing, discovery & access, education & training, inclusive, informed, integrate and synthesis, resilient, scalable, and usable. Sandusky encouraged members of the audience (and libraries in general) to become members of DataOne as well as community-based repositories. He and DataOne see libraries playing a significant role when it comes to replication of research data.

Somewhere in here I, Eric Lease Morgan (University of Notre Dame), spoke to the goals of the Digital Public Library of America (DPLA) as well as outlined my particular DPLA Beta-Sprint Proposal. In short, I advocated the library community move beyond the process of find & get and towards the process of use & understanding.

Ken Irwin (Wittenberg University) gave a short & sweet lightning talk about “hacking” trivial projects. Using an example from his workplace (an application used to suggest restaurants) he described how he polished his JQuery skills and enhanced his graphic design skills. In short he said, “There is a value for working on things that are not necessarily library-related… By doing so there is less pressure to do it ‘correctly’.” I thought these were words of wisdom and point to the need for play and experimentation.

Rick Johnson (University of Notre Dame) described how he and his group are working in an environment where the demand is greater than the supply. Questions he asked of the group, in an effort to create discussion, included: how do we move from a development shop to a production shop, how do we deal with a backlog of projects, to what degree are we expected to address library problems versus university problems, to what extent should our funding be grant-supported and if highly, then what is our role in the creation of these grants. What I appreciated most about Johnson’s remarks was the following: “A library is still a library no matter what medium they collect.” I wish more of our profession expressed such sentiments.

Margaret Heller (Dominican University) asked the question, “How can we assist library students learn a bit of technology and at the same time get some work done?” To answer her question she described how her students created a voting widget, did an environmental scan, and created a list of library labs.

Christine McClure (Illinois Institute of Technology) was given the floor, and she was seeking feedback in regard to her recently launched mobile website. Working in a very small shop, she found the design process invigorating since she was not necessarily beholden to a committee for guidance. “I work very well with my boss. We discuss things, and I implement them.” Her lightning talk was the first of many which exploited JQuery and JQuery Mobile, and she advocated the one-page philosophy of Web design.

Jeremy Prevost (Northwestern University) built upon McClure’s topic by describing how he built a mobile website using a Model View Controller (MVC) framework. Such a framework, which is operating system and programming language agnostic, accepts a URL as input, performs the necessary business logic, branches according to the needs/limitations of the HTTP user-agent, and returns the results appropriately. Using MVC he is able to seamlessly provide many different interfaces to his website.
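The flow described above (URL in, business logic, branch on user-agent, appropriate result out) might look something like the following Python sketch. It is my own illustration, not Prevost’s code nor any particular framework. The tiny catalog, the function names, and the user-agent tokens are all hypothetical.

```python
from urllib.parse import parse_qs, urlparse


def find_titles(query):
    """Model: search a (hypothetical) list of titles."""
    catalog = ["Walden", "Walden Two", "Cape Cod"]
    return [title for title in catalog if query.lower() in title.lower()]


def render_mobile(titles):
    """View: a bare-bones listing for small screens."""
    return "\n".join(titles)


def render_desktop(titles):
    """View: an HTML list for full-fledged browsers."""
    return "<ul>" + "".join(f"<li>{title}</li>" for title in titles) + "</ul>"


def controller(url, user_agent):
    """Controller: accept a URL, run the logic, and pick a view by user-agent."""
    query = parse_qs(urlparse(url).query).get("q", [""])[0]
    titles = find_titles(query)
    # Assumption: these tokens are enough to recognize a mobile device
    is_mobile = any(token in user_agent for token in ("iPhone", "Android", "Mobile"))
    view = render_mobile if is_mobile else render_desktop
    return view(titles)
```

Because the model and the views know nothing about each other, adding another interface means writing one more view function and one more branch in the controller.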

If a poll had been taken on the best talk of the Meeting, then I think Matthew Reidsma’s (Grand Valley State University) presentation would have come out on top. In it he drove home two main points: 1) practice “progressive enhancement” Web design as opposed to “graceful degradation”, and 2) use JQuery to control the appearance and functionality of hosted Web content. In the former, single Web pages are designed in a bare bones manner, and through the use of conditional Javascript logic and cascading stylesheets the designer implements increasingly complicated pages. This approach works well for building everything from mobile websites to full-fledged desktop browser interfaces. The second point, exploiting JQuery to control hosted pages, was very interesting. He was given access to the header and footer of hosted content (Summon). He then used JQuery’s various methods to read the body of the pages, munge it, and present more aesthetically pleasing as well as more usable pages. His technique was quite impressive. Through Reidsma’s talk I also learned the necessity of many skills to do Web work. It is not enough to know HTML or Javascript or graphic design or database management or information architecture, etc. Instead, it is necessary to have a combination of these skills in order to really excel. To a great degree Reidsma embodied such a combination.

Francis Kayiwa (University of Illinois, Chicago) wrapped up the first day by asking the group questions about hosting and migrating applications from different domains. The responses quickly turned to things about EAD files, blog postings, and where the financial responsibility lies when grant money dries up. Ah, Code4Lib. You gotta love it.

Day #2

The second day was given over to three one-hour presentations. The first was by Rich Wolf (University of Illinois, Chicago) who went into excruciating detail on how to design and write RESTful applications using Objective-C.

My presentation on text mining might have been as painful for others. In it I tried to describe and demonstrate how libraries could exploit the current environment to provide services against texts through text mining. Examples included the listing of n-grams and their frequencies, concordances, named-entity extractions, word associations through network diagrams, and geo-locations. The main point of the presentation was “Given the full text of documents and readily accessible computers, a person can ‘read’ and analyze a text in so many new and different ways that would not have been possible previously.”
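The first of those examples, listing n-grams and their frequencies, takes only a few lines of code. The Python sketch below is an illustration rather than the code used in the presentation, and its tokenizer is deliberately naive.

```python
import re
from collections import Counter


def ngrams(text, n=2):
    """Count every run of n consecutive words in a text."""
    words = re.findall(r"[a-z']+", text.lower())
    # Slide a window of n words across the text, one word at a time
    return Counter(" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
```

Calling `ngrams(text, 2).most_common(10)` returns the ten most frequent two-word phrases, and changing `n` yields single words or longer phrases.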

The final presentation at the Meeting was by Margaret Kipp (University of Wisconsin Milwaukee), and it was called “Teaching Linked Data”. In it she described and demonstrated how she was teaching library school students about mash-ups. Apparently her students have very little computer experience, and the class centered on things like the shapes of URLs, the idea of Linked Data, and descriptions of XML and other data streams like JSON. Using things like Fusion tables, Yahoo Pipes, Simile Timelines, and Google Maps students were expected to become familiar with new uses for metadata and open data. One of the nicest things I heard from Kipp was, “I was also trying to teach the students about programmatic thinking.” I too think such a thing is important; I think it important to know how to think both systematically (programmatically) as well as analytically. Such thinking processes complement each other.

Summary

From my perspective, the Meeting was an unqualified success. Kudos go to Francis Kayiwa, Abigail Goben, Bob Sandusky, and Margaret Heller for organizing the logistics. Thank you! The presentations were on target. The facilities were more than adequate. The wireless network connections were robust. The conversations were apropos. The company was congenial. The price was right. Moreover, I genuinely believe everybody went away from the Meeting learning something new.

I also believe these sorts of meetings demonstrate the health and vitality of the growing Code4Lib community. The Code4Lib mailing list boasts about 2,000 subscribers who are from all over the world but mostly in the United States. Code4Lib sponsors an annual meeting and a regularly occurring journal. Regional meetings, like this one in Chicago, are effective and inexpensive professional development opportunities for people who are unable to attend or uncertain about the full-fledged conference. If these meetings continue, then I think we ought to start charging a franchise fee. (Just kidding, really.)

Trip to the Internet Archive, Fort Wayne (Indiana)

Posted on July 18, 2011 in Uncategorized

This is the tiniest of travelogues describing a field trip to a branch of the Internet Archive in Fort Wayne (Indiana), July 11, 2011.

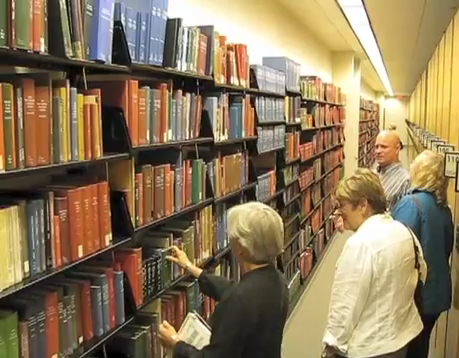

Here at the Hesburgh Libraries we are in the midst of a digitization effort affectionately called the Catholic Pamphlets Project. We have been scanning away, but at the rate we are going we will not get done until approximately 2100. Consequently we are considering the outsourcing of the scanning work, and the Internet Archive came to mind. In an effort to learn more about their operation, a number of us went to visit a branch of the Internet Archive, located at the Allen County Public Library in Fort Wayne (Indiana), on Monday, July 11, 2011.

When we arrived we were pleasantly surprised at the appearance of the newly renovated public library. Open. Clean. Lots of people. Apparently the renovation caused quite a stir. “Why spend money on a public library?” The facilities of the Archive, on the other hand, were modest. They are located in the lower level of the building, with no windows and cinderblock walls, and they are just a tiny bit cramped.

Internet Archive, the movie!

Pilgrimage to Johnny Appleseed’s grave

We were then given a tour of the facility and learned about the workflow. Books arrive in boxes. Each book is associated with bibliographic metadata usually found in library catalogs. Each is assigned a unique identifier. The book is then scanned in a “Scribe”, a contraption cradling the book in a V-shape while photographing each page. After the books are digitized they are put through a bit of a quality control process making sure there are no missing pages, blurry images, or pictures of thumbs. Once that is complete the book’s images and metadata are electronically sent to the Internet Archive’s “home planet” in San Francisco for post-processing. This is where the various derivatives are made. Finally, the result is indexed and posted to the ‘Net. People are then free to download the materials and do with them just about anything they desire. We have sent a number of our pamphlets to the Fort Wayne facility and you can see the result of the digitization process.

From my point of view, working with the Internet Archive sounds like a good idea, especially if one or more of their Scribes comes to live here in the Libraries. All things considered, their services are inexpensive. They have experience. They sincerely seem to have the public good at heart. They sure are more transparent than the Google Books project. Digitization by the Internet Archive may be a bit challenging when it comes to items not in the public domain, but compared to the other issues I do not think this is a very big issue.

To cap off our visit to Fort Wayne we made a pilgrimage to Johnny Appleseed’s (John Chapman’s) grave. A good time was had by all.

The Catholic Pamphlets Project at the University of Notre Dame

Posted on June 21, 2011 in Uncategorized

This posting introduces an initiative colloquially called the Catholic Pamphlets Project and outlines its current state.

Earlier this year the Hesburgh Libraries was awarded a “mini-grant” from University administration to digitize and make accessible a set of Catholic Americana. From the proposal:

The proposed project will enable the Libraries and Archives to apply these [digital humanities computing] techniques to key Catholic resources held by the University. This effort supports and advances the Catholic mission of the University by providing enhanced access to significant Catholic scholarship and facilitating the discovery of new knowledge. We propose to create an online collection of Catholic Americana resources and to develop and deploy an online discovery environment that allows users to search metadata and full-text documents, and provides them with tools to interact with the documents. These web-based tools will support robust keyword searching within and across texts and the ability to analyze texts and detect patterns across documents using tools such as charts, graphs, timelines, etc.

A part of this Catholic Americana collection is a sub-collection of about 5,000 Catholic pamphlets. With titles like The Catholic Factor In Urban Welfare, About Keeping Your Child Healthy, and The Formation Of Scripture the pamphlets provide a rich fodder for research. We are currently in the process of digitizing these pamphlets, thus the name, the Catholic Pamphlets Project.

While the Libraries has digitized things in the past, previous efforts have been neither as holistic nor as large in scope. Because of the volume of materials, in both analog and digital forms, the Catholic Pamphlets Project is one of the larger digitization projects the Libraries has undertaken. Consequently, it involves just about every department: Collection Development, Special Collections, Preservation, Cataloging, Library Systems, and Public Services. To date, as many as twenty different people have been involved, and the number will probably grow.

What are we going to actually do? What objectives are we going to accomplish? The answers to these questions fall into four categories, listed here in no priority order:

- digitize a set of Catholic Americana – the most obvious objective

- experiment with digitizing techniques – here we are giving ourselves the opportunity to fail; we’ve never really been here before

- give interesting opportunities to graduate students – through a stipend, a junior scholar will evaluate the collection, put it into context, and survey the landscape when it comes to the digital humanities

- facilitate innovative services to readers – this will be the most innovative aspect of the Project because we will be providing a text mining interface to the digitized content

Towards these ends, a number of things have been happening. For example, catalogers have been drawing up new policies. And preservationists have been doing the same. Part-time summer help has been hired. They are located in our Art Slide Library and have digitized just less than two dozen items. As of this afternoon, summer workers in the Engineering Library are lending a scanning hand too. Folks from Collection Development are determining the copyright status of pamphlets. The Libraries is simultaneously building a relationship with the Internet Archive. A number of pamphlets have been sent to them, digitized, and returned. For a day in July a number of us plan on visiting an Internet Archive branch office in Fort Wayne to learn more. Folks from Systems have laid down the infrastructure for the text mining, a couple of text mining orientation sessions have been facilitated, and about two dozen pamphlets are available for exploration.

The Catholic Pamphlets Project is something new for the Hesburgh Libraries, and it is experiencing incremental progress.

Research Data Inventory

Posted on May 19, 2011 in Uncategorized

This is the home page for the Research Data Inventory.

If you create or manage research data here at the University, then please complete the 10-question form. It will take you less than two minutes. I promise.

Research data abounds across the University of Notre Dame. It comes in many shapes and sizes, and it comes from many diverse disciplines. In order for the University to support research it behooves us to know what data sets exist and how they are characterized. This — the Research Data Inventory — is one way to accomplish this goal.

The aggregated results of the inventory will help the University understand the breadth & depth of the local research data, set priorities, and allocate resources. The more we know about the data sets on campus, the more likely resources will be allocated to make their management easier.

Complete the inventory, and tell your colleagues about it. Your efforts are sincerely appreciated.

Data Management Day

Posted on April 30, 2011 in Uncategorized

This is the home page for the University of Notre Dame’s inaugural Data Management Day (April 25, 2011). Here you will find a thorough description of the event. In a sentence, it was a success.

Introduction

Co-sponsored by the Center for Research Computing and the Hesburgh Libraries, the purpose of Data Management Day was to raise awareness of all things research data across the Notre Dame community. This half-day event took place on Monday, April 25 from 1–5 o’clock in Room 126 of DeBartolo Hall. It brought together as many people as possible who deal with research data. The issues included but were not limited to:

- copyrights, trademarks, and patents

- data management plans

- data modeling and metadata description

- financial sustainability

- high performance computing

- licensing, distribution, and data sharing

- preservation and curation

- personal privacy and human subjects

- scholarly communication

- security, access control, and authorization

- sponsored research and funder requirements

- storage and backup

Presenters

To help get us going and to stimulate our thinking, a number of speakers shared their experience.

In “Science and Engineering need’s perspective” Edward Bensman (Civil Engineering & Geological Sciences) described how he quantified the need for research data storage & backup. He noted that people’s storage quotas were increasing at a linear rate but the need for storage was increasing at an exponential rate. In short he said, “The CRC is not sized for University demand and we need an enterprise solution.” He went on to recommend a number of things, specifically:

- review quotas and streamline the process for getting more

- consider greater amounts of collaboration

- improve campus-wide support for Mac OS X

- survey constituents for more specific needs

Charles Vardeman (Center for Research Computing) in “Data Management for Molecular Simulations” outlined the workflow of theoretical chemists and enumerated a number of models for running calculations against them. He emphasized the need to give meaning to the data, and thus a metadata schema called SMILES was used in conjunction with relational database models to describe content. Vardeman concluded with a brief description of a file system-based indexing scheme that might make the storage and retrieval of information easier.

Vardeman’s abstract: Simulation algorithms are enabling scientists to ask interesting questions about molecular systems at an increasingly unmanageable rate from a data perspective. Traditional POSIX directory and file storage models are inadequate to categorize this ever increasing amount of data. Additionally, the tools for managing molecular simulation data must be highly flexible and extensible allowing unforeseen connections in the data to be elucidated. Recently, the Center for Research Computing built a simulation database to categorize data from Gaussian molecular calculations. Our experience of applying traditional database structures to this problem will be discussed highlighting the advantages and disadvantages of using such a strategy to manage molecular data.

Daniel Skendzel (Project Manager for the Digital Asset Strategy Committee) presented an overview of the work of the Digital Asset Management group in “Our Approach to Digital Asset Management”. He began by comparing digital asset management to a storage closet and then showed two different pictures of closets, one messy and the other orderly. He described the University’s digital asset management system as “siloed”, and he envisioned bringing these silos together into a more coherent whole complete with suites of tools for using the assets more effectively. Skendzel compared & contrasted our strategy to Duke’s (coordinated), Yale’s (enabling), and the University of Michigan’s (integrated) noting the differences in functionality and maturity across all four. I thought his principles for cultural change (something he mentioned at the end) were most interesting:

- central advocacy

- faculty needs driven

- built on standard architecture

- flexible applications

- addresses entire life cycle

- mindful of the cultural element

Skendzel’s abstract: John Affleck-Graves and the Leadership Committee on Operational Excellence commissioned the Digital Asset Strategy Committee in May 2010 to create and administer a master plan to provide structure for managing digital content in the form of multi-media, images, specific document-types and research data. The plan will address a strategy for how we can best approach the lifecycle needs of capturing, managing, distributing and preserving our institutional digital content. This talk will focus on our progress toward a vision to enhance the value of our digital content by integrating our unique organizational culture with digital technologies.

Darren Davis (Associate Vice President for Research and Professor of Political Science) talked about the importance and role of institutional review boards in “Compliance and research data management”. He began by pointing out the long-standing issues of research and human subjects, noting a decades-old report outlining the challenges. He stated how the University goes well beyond the Federal guidelines, and he said respect for the individual person is the thing the University is most interested in when it comes to these guidelines. When human subjects are involved in any study, he said, it is very important for the subjects to understand what information is being gleaned from them, the compensation they will receive from the process, and that their services are being given willingly. When licensing data from human subject research, confidentiality is an ever-present challenge, and the data needs to be de-identifiable. Moreover, the licensed data cannot be repurposed. Finally, Davis said he and the Office of Research will help faculty create data management plans, and they look to expand these service offerings accordingly.

Davis’s abstract: Advances in technology have enabled investigators to explore new avenues of research, enhance productivity, and use data in ways unimagined before. However, the application of new technologies has the potential to create unanticipated compliance problems regarding what constitutes human subject research, confidentiality, and consent.

In “From Design to Archiving: Managing Multi-Site, Longitudinal Data in Psychology” Jennifer Burke (Research Assistant Professor of Psychology & Associate Director of the Center for Children and Families) gave an overview of the process she uses to manage her research data. She strongly advocated planning that includes storage, security, back-up, unit analysis, language, etc. Her data comes in all formats: paper, electronic, audio/video. She designs and builds her data sets sometimes in rows & columns and sometimes as linked relational databases. She is mindful of file naming conventions and the use of labeling conventions (her term for “metadata”). There is lots of data entry, data clean-up, and sometimes “back filling”. Finally, everything is documented in code books complete with a CD. She shares her data, when she can, through archives, online, and even the postal mail. I asked Burke which of the processes was the most difficult or time-consuming, and she said, without a doubt, the data entry was the most difficult.

Burke’s abstract: This brief talk will summarize the work of the Data Management Center, from consulting on methodological designs to preparing data to be archived. The talk will provide an overview of the types of data that are typical for psychological research and the strategies we have developed to maintain these data safely and efficiently. Processes for data documentation and preparation for long-term archiving will be described.

Up next was Maciej Malawski (Center for Research Computing, University of Notre Dame & AGH University of Science and Technology, Krakow, Poland) and his “Prospects for Executable Papers in Web and Cloud Environments”. Creating research data is one thing; making it available is another. In this presentation Malawski advocated “executable papers”: applications/services embedded into published articles allowing readers to interact with the underlying data. The idea is not brand new and may have been first articulated as early as 1992 when CD-ROMs became readily available. Malawski gave at least a couple of working examples of executable papers, citing myExperiment and the GridSpace Virtual Laboratory.

Malawski’s abstract: Recent developments in both e-Science and computational technologies such as Web 2.0 and cloud computing call for a novel publishing paradigm. Traditional scientific publications should be supplemented with elements of interactivity, enabling reviewers and readers to reexamine the reported results by executing parts of the software on which such results are based as well as access primary scientific data. We will discuss opportunities brought by recent Web 2.0, Software-as-a-Service, grid and cloud computing developments, and how they can be combined together to make executable papers possible. As example solutions, we will focus on two specific environments: MyExperiment portal for sharing scientific workflows, and GridSpace virtual laboratory which can be used as a prototype executable paper engine.

Patrick Flynn (Professor of Computer Science & Engineering, Concurrent Professor of Electrical Engineering) seemed to have the greatest amount of experience in the group, and he shared it in a presentation called “You want to do WHAT?: Managing and distributing identifying data without running afoul of your research sponsor, your IRB, or your Office of Counsel”. Flynn and his immediate colleagues have more than 10 years of experience with biometric data. Working with government and non-government grant sponsors, Flynn has been collecting images of people’s irises, their faces, and other data points. The data is meticulously maintained, given back to the granters, and then licensed to others. To date Flynn has about 18 data sets to his credit, and they have been used in a wide variety of subsequent studies. The whole process is challenging, he says. Consent forms. Metadata accuracy. Licensing. Institutional review boards. In the end, he advocated that the University cultivate a culture of data stewardship and articulated the need for better data management systems across campus.

Flynn’s abstract: This talk will summarize nine years’ experience with collecting biometrics data from consenting human subjects and distributing such data to qualified research groups. Key points visited during the talk will include: Transparency and disclosure; addressing concerns and educating the concerned; deploying infrastructure for the management of terabytes of data; deciding whom to license data to and how to decline requests; how to manage an ongoing data collection/enrollment/distribution workflow.

In “Globus Online: Software-as-a-Service for Research Data Management” Steven Tuecke (Deputy Director, Computation Institute, University of Chicago & Argonne National Laboratory) described the vision for a Dropbox-like service for scientists called Globus Online. By exploiting cloud computing techniques, Tuecke sees a time when researchers can go to a website, answer a few questions, select a few check boxes, and have the information technology for their lab set up almost instantly. Technology components may include blogs, wikis, mailing lists, file systems for storage, databases for information management, indexer/search engines, etc. “Medium and small labs should be doing science, not IT (information technology).” In short, Tuecke advocated Software-as-a-Service (SaaS) for much of research data management.

Tuecke’s abstract: The proliferation of data and technology creates huge opportunities for new discoveries and innovations. But they also create huge challenges, as many researchers lack the IT skills, tools, and resources ($) to leverage these opportunities. We propose to solve this problem by providing missing IT to researchers via a cost-effective Software-as-a-Service (SaaS) platform, which we believe can greatly accelerate discovery and innovation worldwide. In this presentation I will discuss these issues, and demonstrate our initial step down this path with the Globus Online file transfer service.

The final presentation was given by Timothy Flanagan (Associate General Counsel for the University), “Legal issues and research data management”. Flanagan told the audience it was his responsibility to represent the University and provide legal advice. When it comes to research data management, there are more questions than answers. “A lot of these things are not understood.” He sees his job and the General Counsel’s job as one of balancing obligation with risk.

Summary

Jarek Nabrzyski (Center for Research Computing) and I believe Data Management Day was a success. The event itself was attended by more than sixty-five people, and they seemed to come from all parts of the University. Despite the fact that the presentations were only fifteen minutes long, each of the presenters obviously spent a great deal of time putting their thoughts together. Such effort is greatly appreciated.

The discussion after the presentations was thoughtful and meaningful. Some people believed a larger top-down effort to provide infrastructure support was needed. Others thought the needs were more pressing and the solution to the infrastructure and policy issues needs to come up from a grassroots level. Probably a mixture of both is required.

One of the goals of Data Management Day was to raise awareness of all the issues surrounding research data management. The presentations covered many of them:

- collecting, organizing, and distributing data

- data management plans

- digital asset management activities at Notre Dame

- institutional review boards

- legal issues surrounding research data management

- organizing & analyzing data

- SaaS and data management

- storage space & infrastructure

- the use of data after it is created

Data management is happening all across our great university. The formats, storage mechanisms, data modeling, etc. are different from project to project. But they all share a set of core issues that need to be addressed to one degree or another. By bringing together as many people as possible and facilitating discussion among them, the hope was to build understanding across our academe and ultimately work more efficiently. Data Management Day was one way to accomplish this goal.

What are the next steps? Frankly, we don’t know. All we can say is research data management is not an issue that can be addressed in isolation. Instead, everybody has some of the solution. Talk with your immediate colleagues about the issues, and more importantly, talk with people outside your immediate circle. Our whole is greater than the sum of our parts.